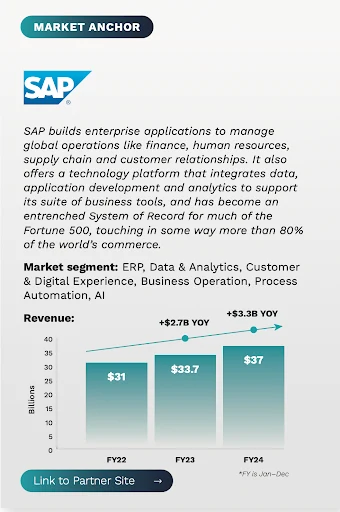

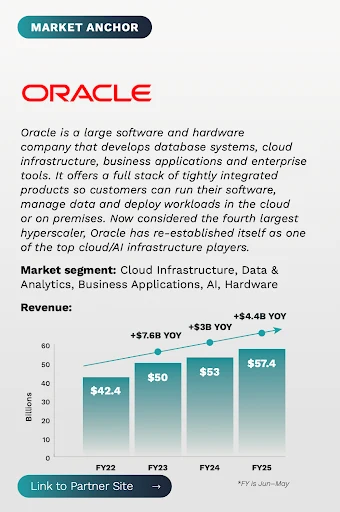

Over the past 50 years, Oracle and SAP have evolved from emerging innovators into foundational pillars of enterprise IT, building global empires that power many of the world’s largest organizations. These two legacy tech vendors may lack the flash of newer entrants, but they have steadily expanded their platforms and partner ecosystems over the years, creating what many might consider dull but durable growth in the process. It’s this durable growth that has kept Oracle and SAP firmly anchored in the Tercera 30 since its launch.

However, these software giants are anything but dull these days. Both are capitalizing on their scale and footprint among large enterprises to capture opportunities in the cloud and AI, delivering a performance jolt not only to the companies themselves, but also to their ecosystems of tech services partners.

In this article, we explain why these ecosystems are seeing a resurgence in the AI era, and why entrepreneurs and investors like Tercera are taking a closer look at these industry titans.

THE RETURN OF THE TITANS

Oracle and SAP’s rebirth is, in part, a matter of sunsetting the past. Both companies are phasing out support for their legacy on-premises solutions, pushing and pulling customers onto their more modern cloud platforms.

For example, SAP is pushing all customers of its core on-premises ERP system, ECC, to its newer S/4HANA platform, setting a deadline of Dec 31, 2027 to create urgency. After that date, it will no longer maintain support for the product. Oracle is pursuing a similar strategy with its legacy products, although it has been less aggressive about ending support. All this is creating a wave of demand for large and lucrative migrations among the enterprises that depend on these systems.

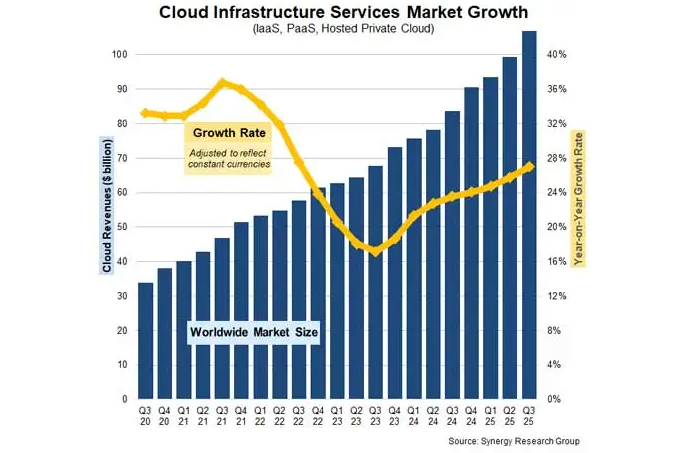

Both companies are leaning on their scale, footprint in enterprise IT and product breadth to move forward. Oracle has inked multibillion-dollar contracts with OpenAI, Microsoft, Meta, and others to provide the cloud infrastructure required to train and run the world’s largest AI models at scale. It is also using its strengths in data, high-performance hybrid cloud infrastructure, and industries to land large new enterprise customers and expand its existing footprint. In its Q2’25 earnings call, Oracle surprised analysts by announcing four new multibillion-dollar contracts, which increased its remaining performance obligation (RPO) contract backlog by 359% to $455 billion.

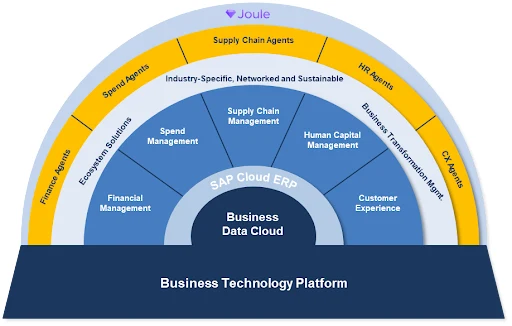

The stickiness of SAP’s ERP platform and its integration into mission-critical processes is giving the company a leg up in the AI game. The company has made a massive investment in modernizing its Business Technology Platform (SAP BTP) and core applications, and is building out AI capabilities on top of BTP with its GenAI tool Joule and a growing number of functional and industry-specific agents. SAP says it now has 400 AI scenarios enabled by Joule and more than 34,000 customers using its AI capabilities.

Oracle and SAP may have older platforms, but that also gives them a competitive moat. Their deep customer relationships, integration into core business processes, substantial R&D budgets, and deep-seated regulatory expertise are not easy to displace, especially for smaller, less-seasoned competitors.

Their relationships with other AI and data leaders also add to their moat. Oracle’s alliances with NVIDIA, OpenAI, Google and Meta mean that enterprises can run most large language models and agentic AI workloads directly on Oracle’s cloud infrastructure. SAP’s partnerships with Microsoft Copilot and OpenAI allow customers to embed those partners’ LLMs into applications via SAP Business Technology Platform. SAP has also deepened its partnership with Databricks, making Databricks a native component of SAP’s Business Data Cloud.

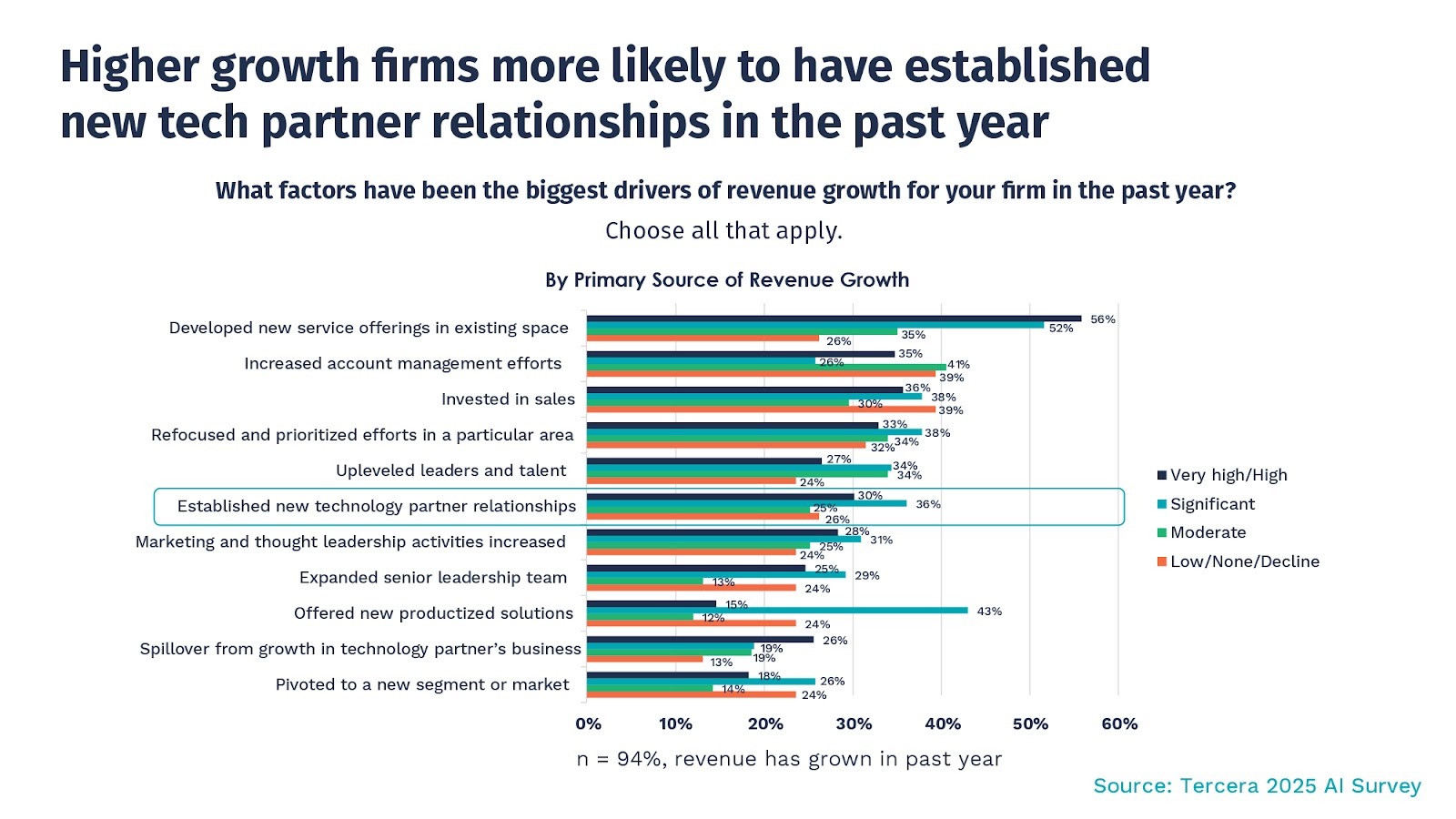

These partnerships with AI-native companies, and AI-native services partners, are essential as the companies reposition and reinvent themselves for the AI era. Oracle and SAP carry brand baggage as legacy players, which newer entrants will continue to exploit. We’re already seeing cloud-native startups attempting to chip away at their markets. Yet these behemoths are not going to go quietly, and their moat is real.

THE OPPORTUNITY FOR TECH SERVICES FIRMS

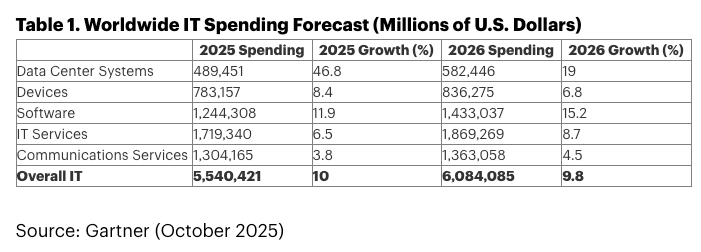

The biggest near-term opportunity for services partners is with migrations. The transition from SAP and Oracle’s legacy on-premises systems to cloud platforms is far more than a mere software upgrade. These are multi-year, multi-million dollar transformations that can ripple across every division and process a company runs. Doing this successfully requires untangling and reconnecting integrations that are deeply embedded into mission-critical systems. It also requires understanding and optimizing data across customized business processes, and orchestrating change across global teams.

ERP systems are deeply embedded in business operations and complex to migrate, which is why a large majority of ECC clients have not yet migrated to S/4HANA. Yet the pressure is mounting. Organizations that don’t move onto these more modern platforms are at risk if vendors discontinue maintenance for their systems. They also won’t be able to take advantage of new tools and AI advancements, potentially putting them behind the curve.

SAP and Oracle do not have sufficient in-house resources to support all these migrations. We have heard from partners that SAP, for one, has raised its bar for the clients it directly supports from $1B to $3B in customer revenue, pushing smaller and midsize enterprises into the open arms of IT services firms.

For IT services firms, the opportunity presents a windfall of high-value work that could last a decade or more. Some work will extend well beyond the formal sunset dates. Helping organizations move to cloud and AI-enabled systems is a sizable journey, not a single event.

Implementation revenue can spike in the initial 18 months, but the need for continuous improvement, support and training could provide a sizable recurring revenue stream. How significant is the opportunity? I’ve met several companies that say they can generate as much revenue from fewer than 20 clients on SAP’s S/4HANA as from 250 still on legacy systems.

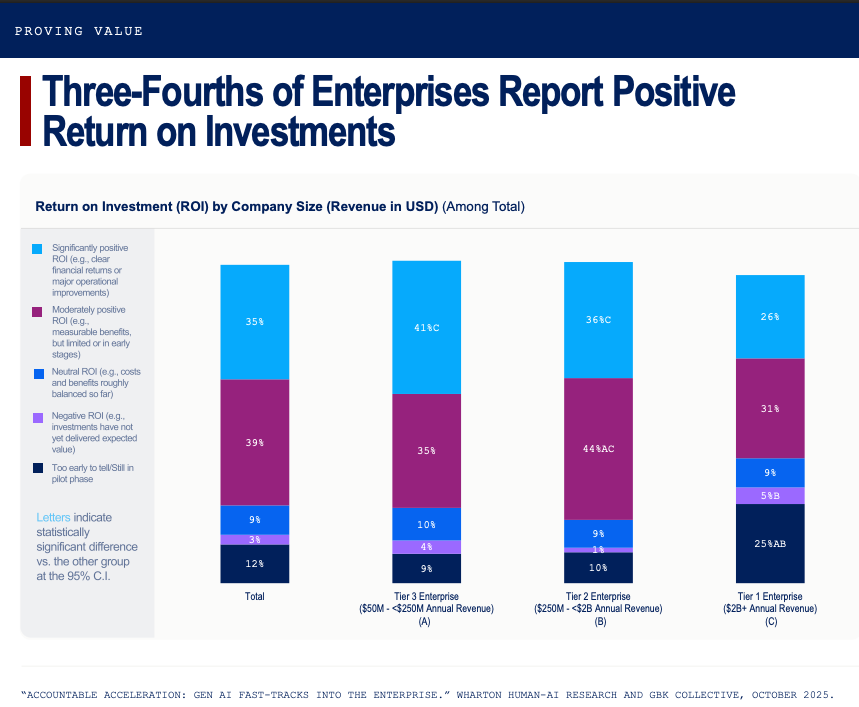

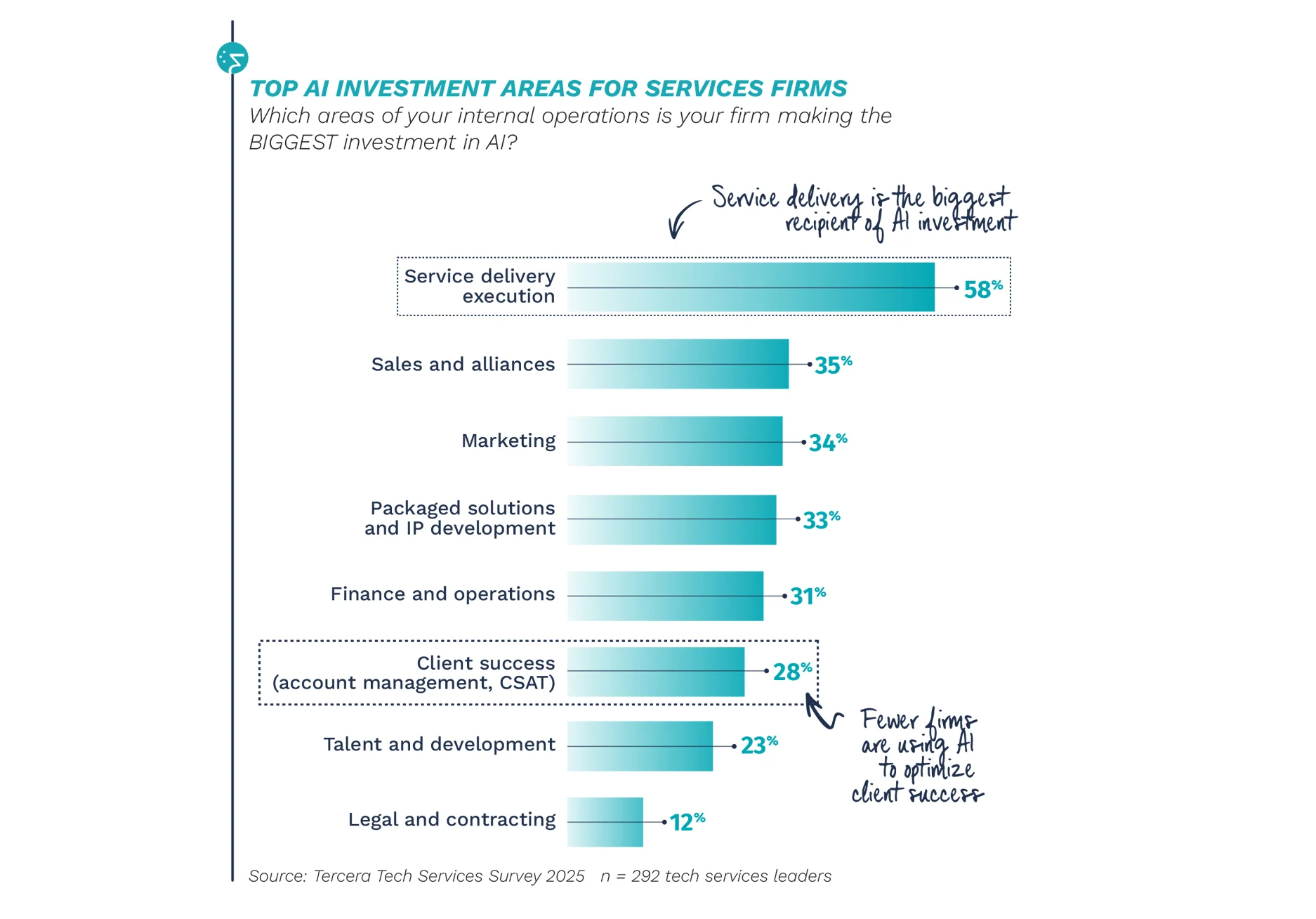

The AI opportunity extends far beyond migrations and implementations. Today, Oracle and SAP applications collectively house much of the world’s most valuable private enterprise data. This is decades of structured, governed data spanning finance, supply chains, manufacturing, HR, customer behavior, and regulatory operations.

The data in these systems is not only high-quality and deeply contextual, but also uniquely tied to real business processes, decisions, and outcomes. As AI shifts from generic models to domain-specific intelligence, these systems become a true treasure trove: the foundation for training and deploying AI agents that can reason, predict and act within specific industries and workflows.

FROM UNCOOL TO UNMISSABLE

Coolness may be in the eye of the beholder, but power is measurable. Oracle and SAP have made deliberate bets that place them at the gravitational center of the AI era, arguably the most consequential enterprise technology shift of the decade. This should be a tailwind for the SAP and Oracle partners that can make the evolution alongside these vendors, and an opportunity for AI-native firms looking for a place to play.

Both SAP and Oracle already have large, highly mature service partner ecosystems, yet the number of partners in both ecosystems is still growing – a testament to the opportunities ahead.

If you are a partner in one of these ecosystems, looking for growth capital to tackle this moment of opportunity, we’d love to hear from you here.

Read more about SAP and Oracle in the Tercera 30